The term “validity” comes from the English word “valid,” which means “sound, suitable, appropriate.” In scientific circles, the term denotes an indicator of how suitable a particular research technique is in a specific situation or under specific conditions.

Validity is often placed alongside the concept of “reliability.” As a criterion, it determines the relevance and effectiveness of the selected research methods: what they measure and how, what picture they reflect, and why the assessment is made.

The closer a technique brings the researcher to the main feature of the problem, making it possible to assess its nature and impact, the higher the technique's validity. The process of checking the quality, effectiveness and applicability of research methods is called validation.

What is validity in psychology

Validity is an important characteristic of psychological tests and techniques. It must be verified by experimenters along with the reliability of the technique. The validity criterion is most often used in psychodiagnostics. It addresses the problem of how well the data obtained during the study correspond to the “ideal” data, that is, data not distorted by any internal or external factors.

The problem of subjectivity is clearly expressed in psychology. No matter how accurate, in the opinion of the experimenter, the obtained data are, they are distorted. To check the level of reliability of the acquired knowledge, a validity criterion is used. Validity is not used in the exact sciences: physics, chemistry, mathematics.

This is a unique criterion of psychology that allows us to smooth out the difficulties of obtaining objective knowledge. The first reason for the appearance of this tool is the problem of accurately determining the characteristic or property being studied. Thus, when studying anxiety, it is impossible to unambiguously establish the phenomenon being diagnosed. Anxiety overlaps with fear, worry, and unease.

The second reason is the subjectivity of the parameter being studied using a psychodiagnostic technique. The developer puts his own meaning into the wording, but this does not mean that the subject thinks according to the same template. Interpretations of the same questions or statements can vary greatly.

In the exact sciences there is no problem of defining the object under study. The difficulty lies in the methods of study. For example, a physicist studying the parameters of an iron ball can see and touch it directly. He sets himself a goal: to determine the radius of the ball. The parameter is objective and is found using measurements and formulas.

Main types: operational and construct

Operational validity allows you to evaluate the substantive planning of the study. It shows the consistency of the methods and experimental design with the hypothesis being tested, that is, the degree to which the statements under study comply with the theoretical provisions underlying the organization and conduct of the experiment. Its assessment is related to the success of the transition from the formulation of hypotheses to the choice of methodology.

Construct validity involves looking for factors that explain test-taking behavior. It is associated with the theoretical construct itself and is part of operational validity.

The first step is to describe as completely as possible the construct that will be measured. This is done by formulating hypotheses about it that prescribe what it may or may not correlate with. These hypotheses are then tested.

What is the validity of the methodology

A technique, in contrast to a method, is a set of specific actions of a specialist aimed at achieving a particular result. A research method may include several techniques. For example, the survey method in the classification of B. G. Ananyev can be carried out using different test questionnaires.

Validity in psychology is the correspondence of the integrity of the psychodiagnostic method and its individual parts to the mental characteristic being studied.

A psychodiagnostic method (PDM) may include several scales. For example, a test questionnaire that determines the level of neuroticism-psychopathy consists of the following scales: psychopathization, neuroticism and the “lie” scale. The third scale is used to test the sincerity of the subject. The most common reason for lying is the desire for approval. This factor greatly distorts statistical and individual data.

A valid PDM is a technique that diagnoses only a narrow range of characteristics specified by the experimenter. It enjoys great confidence among specialists and is used in scientific research. The higher the validity coefficient, the more reliable the data obtained during the experiment.

Threats to internal and external validity

The main factors influencing internal validity indicators:

- background events interfering with experiments;

- natural changes over time inherent in the object/subject of research;

- incorrectly selected research methodology;

- unstable results due to the high level of error of the selected tools;

- the interaction of several factors directly affecting internal validity;

- subjective biases of the experimenter (unwillingness to take into account details, incorrect accounting of the results obtained, inaccuracy/carelessness, etc.).

What threats reduce the level of external validity?

- The interaction between poor-quality selection of research materials and the choice of a methodological basis for them.

- The reactive effect: a change in the susceptibility of the subject of research after preliminary testing.

- Mutual interference that occurs when several research methods act on one object simultaneously.

What is test validity

A psychological test is a type of psychodiagnostic technique. The test is most popular among experimenters due to its ease of use. The researcher's kit includes stimulus material, answer forms, and instructions.

Test questionnaires can examine such mental characteristics of the subject as stress resistance, intelligence, motivation and tolerance. Questions are formulated taking into account the specifics of the target samples and the purpose of the study.

Questions can be closed (the subject is asked to choose one of the statements) or open (the subject must answer the question himself or comment on the statement). They can also be indirect (referring to generally known facts or opinions) or direct (addressing the individual's own opinion).

The validity of the test ensures the reliability of the data on mental phenomena obtained with its help. Without experimentally proven high validity, the test cannot be considered effective. If the performance of a separate task or the test itself captures the mental phenomenon being measured, then validity takes on a high value.

This means that the test is valid and reliable, since extraneous influences on the test subject are excluded. Some aspects of a test's validity can be checked without deep knowledge of psychology.

For example, first-year students can check a test with the help of subjects. The subjects act as lay experts and are asked to evaluate the clarity of the wording of questions or statements based on personal life experience. The data obtained make it possible to judge the validity of the questionnaire.

Reliability and validity

Validity is an important indicator of the reliability of the results obtained. A flawless experiment must have impeccable validity and reliability. Reliability, like validity, determines the quality and suitability of the developed methodology for use in practice.

The validity indicator is the experimenter’s confidence that, using a given technique, he measured exactly the required characteristic or parameter. For example, a test to determine temperament should measure temperament, and not another quality of a person's personality.

Psychometric properties of psychodiagnostic methods

The psychometric basis of any technique is scales. The concept of “scale” is interpreted in a broad and a narrow sense: in the broad sense, a scale is a specific technique; in the narrow sense, it is a measurement scale that records the characteristics being studied. Each element of the technique corresponds to a certain score or index, which expresses the severity of a particular mental phenomenon.

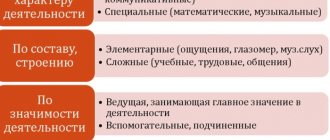

Measuring scales are divided into:

- Metric: interval, ratio scales.

- Non-metric: nominative, ordinal.

| Scale name | Explanation, examples |
| --- | --- |
| Nominative (nominal, scale of names) | Based on a common property or symbol, assigns an observed phenomenon to the appropriate class. The naming scale is the most common in research psychodiagnostic methods. This scale is used, for example, in test questionnaires. The subject's denial or affirmation is compared with the answers in the key. A nominative scale may also involve the selection of one or more characteristics from those proposed. |
| Ordinal | Divides the set of characteristics into elements based on the “more or less” principle, arranging the results in ascending or descending order. An ordinal scale is used in the color choice test. The subject is asked to choose one of the colored squares on a white background, after which the selected square is put aside and the procedure is repeated. Result: the colors are arranged by their attractiveness to the subject, and each is assigned its own rank number. |
| Interval | The elements are ordered not only by the severity of the measured characteristic but also by size: the intervals between the assigned numbers express differences in the degree of the measured characteristic. Interval scales are often used when standardizing primary test scores. |
| Ratio | Arranges elements by numerical value, maintaining proportionality between them. Objects are divided according to the property being measured. The numbers assigned to object classes are proportional to the degree of expression of the property being studied. Used, for example, to determine the sensitivity thresholds of analyzers. Often used in psychophysics. |
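The table notes that interval scales are often used when standardizing primary test scores. The following is a minimal sketch of one common way to do this, assuming raw scores are converted to z-scores and then to T-scores (mean 50, standard deviation 10). The function name `to_t_scores`, the choice of T-scores, the use of the population standard deviation, and the sample data are all assumptions made for illustration.

```python
from statistics import mean, pstdev

def to_t_scores(raw_scores):
    """Convert raw (primary) test scores to T-scores (mean 50, SD 10).

    Assumption: the standardization sample is treated as the whole
    reference group, so the population standard deviation is used.
    """
    m = mean(raw_scores)
    sd = pstdev(raw_scores)
    if sd == 0:
        raise ValueError("all scores are identical; cannot standardize")
    # z-score first, then rescale to the T-score metric
    return [50 + 10 * (x - m) / sd for x in raw_scores]

# Hypothetical raw scores of five subjects (illustrative data only)
raw = [12, 15, 9, 20, 14]
t_scores = to_t_scores(raw)
print([round(t, 1) for t in t_scores])
```

After the conversion, differences between scores can be compared across tests that use different raw-score ranges, which is the practical reason for standardizing.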

After determining the scale used to construct the test, it is necessary to determine the psychometric properties of the technique.

These include:

- Representativeness.

- Standardization.

- Reliability.

- Validity.

Representativeness is a property of a sample of subjects. It can characterize both a sample and the general population. Representativeness has two parameters: qualitative and quantitative. The qualitative parameter characterizes the choice of subjects and methods of constructing the sample.

A quantitative parameter is the sample size expressed in numbers.

In psychological research, this property determines the extent to which results can be generalized. For example, suppose relationships between men and women are studied. If the sample mixes subjects of different ages (schoolchildren, students, adults, pensioners), its representativeness will be low.

However, if the subjects are approximately the same age and field of activity (only schoolchildren, only students, only adults, or only pensioners, of both sexes), then the representativeness will be high. In psychodiagnostics, representativeness is used to indicate the possibility of applying a technique to the entire population.

Standardization is a simplification of the methodology that brings its components and application procedures to uniform standards. A PDM should be universal and applicable by different specialists in different situations. If the structure of the PDM deviates from the standards, its results will not be comparable with the results of other studies. Non-standardized methods are used mainly for scientific research.

With their help, new mental phenomena are studied, but such techniques cannot be used for psychodiagnostic purposes. Another important parameter of a PDM is reliability. It characterizes the accuracy and stability of the results obtained using a specific technique.

The high reliability of the technique eliminates the influence of extraneous factors and brings the experiment significantly closer to a “pure” one. Reliability and validity are different concepts. Moreover, reliability is interpreted more broadly than validity: reliability > validity.

For example, on a day off a person gets the opportunity to spend time either fishing or hunting. If he decides to go hunting, but takes a fishing rod with him, then his choice will not be valid. However, if a person went hunting with a gun and it misfired, then the chosen method is unreliable.

Convergent and discriminant validity

The strategy for including certain items in the test depends on how the psychologist defines the diagnostic construct. If Eysenck defines the property “neuroticism” as independent of extraversion-introversion, then his questionnaire should contain approximately equal numbers of items with which neurotic introverts and neurotic extroverts would agree. If in practice the test turns out to be dominated by items from the “Neuroticism-Introversion” quadrant, then, from the point of view of Eysenck's theory, the “neuroticism” factor is loaded with an irrelevant factor, “introversion”. (Exactly the same effect occurs if the sample is skewed, that is, if there are more neurotic introverts than neurotic extroverts.)

In order to avoid such difficulties, psychologists would like to deal with empirical indicators (items) that clearly inform about only one factor. But this requirement is never actually met: every empirical indicator turns out to be determined not only by the factor that we need, but also by others - irrelevant to the measurement task.

Thus, for factors that are conceptually defined as orthogonal to what is being measured (occurring in all combinations), the test writer must employ an artificial balancing strategy in selecting items.

The correspondence of items to the factor being measured ensures the convergent validity of the test. Balancing items against irrelevant factors ensures discriminant validity. Empirically, it is expressed in the absence of a significant correlation with a test measuring a conceptually independent property.
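The empirical check described above can be sketched as two correlations: a new test should correlate strongly with an established test of the same property (convergent validity) and show no meaningful correlation with a test of a conceptually independent property (discriminant validity). The example below is an illustrative sketch only; the `pearson` helper, the variable names, and the scores are hypothetical, and a sample of five subjects is far too small to judge significance in practice.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    # Sum of cross-products and sums of squared deviations from the means
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    ss_x = sum((x - mx) ** 2 for x in xs)
    ss_y = sum((y - my) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must not be constant")
    return cov / math.sqrt(ss_x * ss_y)

# Hypothetical scores of the same five subjects on three tests
new_test = [1, 2, 3, 4, 5]             # test under development
same_construct_test = [1, 3, 2, 5, 4]  # established test of the same property
independent_test = [2, 4, 3, 4, 2]     # test of a conceptually independent property

r_convergent = pearson(new_test, same_construct_test)
r_discriminant = pearson(new_test, independent_test)
print(f"convergent r = {r_convergent:.2f}, discriminant r = {r_discriminant:.2f}")
```

With these made-up scores the first correlation is high and the second is zero, which is the pattern expected of a test with good convergent and discriminant validity.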

Types of validity

Validity in psychology is divided into several types:

- Internal validity. Characterizes the correspondence of the results obtained to the stimulus material of the psychodiagnostic technique. It determines whether the reactions of the subjects are really determined only by the tasks and record the mental properties being studied.

- External validity. High external validity reflects the representativeness of the sample, that is, the compliance of the methodology with the selected categories of the population and the conditions in which it is applied.

- Conceptual validity. This is the consistency of the theory constructed by the experimenter. It is determined at the stage of developing the concept of the mental property being studied. The testing of conceptual validity involves a group of expert psychologists who supervise the creation of the concept and methodology.

- Apparent validity. High apparent validity means the applicability of test items to the target sample. Thus, if an experimenter wants to study certain qualities of schoolchildren, then the tasks in the methodology should be formulated taking into account the level of knowledge of the average schoolchild. In addition, apparent validity captures the understandability of formulations given the socio-demographic characteristics, perceptions and life experiences of the subjects. Statements in questionnaires can be ambiguous. For example, the statement “I start at half a turn” (an idiom for being quick to flare up) can be interpreted in different ways. There are also statements and questions that do not correspond to the life experiences of the subjects: a question about a situation while driving a car will be unfamiliar to people who do not know how to drive.

- Construct validity. Characterizes the correspondence of the method formulations used to the recorded phenomena. High construct validity means that the concepts chosen for the technique (for example, questions or statements in a test) capture the properties that the experimenter wants to explore.

- Predictive validity. Indicates the reliability of the forecast made based on the results of the study using a PDM. This type of validity is important for professional selection and diagnosis in psychiatric clinics. High predictive validity allows the expert to be confident in the prescribed course of treatment or the choice of a candidate for a position. It measures the inclinations and prerequisites of the subject for certain qualities and properties. For example, if we formulate predictive validity as a question, it might sound like this: “Will Ivanov be able to become a qualified endocrinologist?”

- Current validity. Records the current state of affairs. Determines the properties and qualities that the subject currently has. If we formulate current validity as a question, as in the example above, it will sound like this: “Is Ivanov a qualified endocrinologist?” Tests often rely on predictive validity; current validity is used as a substitute.

- Convergent validity. Determines the strength of the connection between parts of the same PDM or between methods that record the same properties. If the convergent validity of two measures is high, then they are likely to diagnose the same quality. A strong connection must be established between statements or questions in a psychodiagnostic technique that relate to the same scale and capture one characteristic.

- Content validity. This is the correspondence of test items to the mental quality being diagnosed. The test should not contain items related to the diagnosis of other properties. However, it may include “lie” scales to increase the reliability of the results.

- Discriminant validity. High discriminant validity reveals the lack of connection between tasks of the same psychodiagnostic technique or between different PDMs. It is used to test questions, statements and techniques that should study unrelated mental properties.

Validity of research methods

Validity of the method. The validity of a research and diagnostic method (the word literally means “complete, suitable, appropriate”) shows to what extent the method measures the quality (property, characteristic) it is intended to evaluate. Validity (adequacy) indicates the degree to which the method corresponds to its purpose. The more fully the diagnosis reveals the characteristic that the method is designed to detect and measure, the higher the method's validity.

The concept of validity refers not only to the methodology, but also to the criterion for assessing its quality, the criterion of validity.

This is the main sign by which one can practically judge whether a given technique is valid.

Such criteria could be the following:

- behavioral indicators: reactions, actions and deeds of the subject in various life situations;

- achievements of the subject in various types of activities: educational, labor, creative, etc.;

- self-organization and data indicating the completion of various control tests and tasks;

- data obtained using other methods, the validity or relationship of which with the method being tested is considered to be reliably established.

The higher the correlation coefficient of the technique with the criterion, the higher the validity. The development of factor analysis has made it possible to create methods that are valid in relation to the identified factor. Only methods tested for validity can be used in diagnostic activities and recommended for mass educational practice.
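The correlation between a technique and its criterion is commonly computed as a Pearson coefficient. As a sketch, let $x_i$ be the score of subject $i$ on the technique, $y_i$ the score of the same subject on the criterion, and $n$ the number of subjects (these symbols are introduced here for illustration and do not appear in the original text):

```latex
r_{xy} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}
              {\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}
```

Values of $r_{xy}$ closer to 1 indicate closer agreement between the technique and the criterion. Other correlation measures may be more appropriate when the scores are ordinal.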

There are several types of validity of diagnostic techniques.

Theoretical (conceptual) validity is determined by the correspondence of the indicators of the quality being studied, obtained using this technique, to the indicators obtained using other techniques (with which there should be a theoretically justified relationship). Theoretical validity is tested by correlations of indicators of the same property obtained using different methods associated with the same theory.

Empirical (pragmatic) validity is checked by the correspondence of diagnostic indicators to real-life behavior, observed actions and reactions of the subject. If, for example, we use a certain technique to assess the character traits of a given subject, then the technique will be considered practically or empirically valid when we establish that this person behaves in life exactly as the technique predicts, i.e. in accordance with his character trait.

Internal validity means the compliance of the tasks, subtests, judgments, etc. contained in the methodology with the overall goal and intent of the methodology as a whole. A methodology is considered internally invalid or insufficiently internally valid when all or part of the questions, tasks or subtests included in it do not measure what is required of it.

External validity is approximately the same as empirical validity, with the only difference that in this case we are talking about the connection between the indicators of the method and the most important, key external signs related to the behavior of the subject.

Apparent validity describes the subject's idea of the method, i.e. it is validity from the subject's point of view. The technique should be perceived by the subject as a serious tool for understanding his personality, somewhat similar to medical diagnostic tools.

Concurrent validity is assessed by correlating the developed methodology with others whose validity in relation to the measured parameter has been established. P. Klein notes that concurrent validity data are useful when there is dissatisfaction with the current methodology for measuring certain variables, and new ones are created in order to improve the quality of measurement.

Predictive validity is established using a correlation between the indicators of the method and some criterion characterizing the property being measured, but at a later time. L. Cronbach considers predictive validity to be the most convincing evidence that a technique measures exactly what it was intended to measure.

Incremental validity has limited value and refers to the case where one test in a battery of tests may have a low correlation with a criterion but not overlap with other tests in that battery. In this case, this test has incremental validity. This can be useful when conducting professional selection using psychological tests.

Differential validity can be illustrated using interest tests as an example. Interest tests generally correlate with academic performance, but in different ways across disciplines. The value of differential validity, like that of incremental validity, is limited.

Content validity is determined by confirming that the tasks of the methodology reflect all aspects of the studied area of behavior. Content validity is often called “logical validity” or “definitional validity.” It means that the method is valid according to experts. It is usually determined for achievement tests. In practice, to determine content validity, experts are selected to indicate which domain(s) of behavior is most important.

Construct validity is demonstrated by describing as completely as possible the variable the technique is intended to measure. Construct validity includes all approaches to determining validity that were listed above.

From the description of the types of validity it follows that there is no single indicator by which the validity of a diagnostic technique is established. However, the developer must provide significant evidence in favor of the validity of the proposed methodology.

It is easy to see the direct connection between validity and reliability. A technique with low reliability cannot have high validity, since the measuring instrument is imprecise and the trait that it measures is unstable. When compared with an external criterion, such a technique can show high agreement in one case and extremely low agreement in another. It is clear that with such data it is impossible to draw any conclusions about the suitability of the technique for its intended purpose.
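In classical test theory this connection is usually expressed as an upper bound on the validity coefficient. The sketch below assumes that model; the symbols $r_{xy}$ (validity coefficient), $r_{xx}$ (reliability of the technique) and $r_{yy}$ (reliability of the criterion) are introduced here for illustration:

```latex
r_{xy} \le \sqrt{r_{xx}\, r_{yy}}
```

For example, a technique with reliability $r_{xx} = 0.49$ cannot correlate above $\sqrt{0.49} = 0.7$ even with a perfectly reliable criterion ($r_{yy} = 1$).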

Deriving a validity coefficient is a labor-intensive procedure that is not necessary in cases where the technique is used by the researcher to a limited extent and is not intended to be used on a wide scale. The validity coefficient is subject to the same requirements as the reliability coefficient: the more methodologically perfect the criterion, the higher the validity coefficient should be. A low validity coefficient is most often noted when focusing on minor aspects.

Additional requirements for research methods

The accuracy of a technique reflects its ability to respond subtly to the slightest changes in the assessed property that occur during the experiment. The accuracy of a diagnostic technique can, in a certain sense, be compared with the accuracy of technical measuring instruments. A meter stick, for example, divided only into centimeters, will measure length more roughly than a ruler graduated in millimeters. In turn, a micrometer, a device that can distinguish lengths differing by 0.001 mm, will be a much more accurate measuring tool than a school ruler.

The more accurate the diagnostic technique, the more finely it can evaluate gradations and identify shades of the quality being measured. However, in practical diagnostics a very high degree of accuracy is not always required. The necessary degree is determined by the task of differentiation, that is, of dividing subjects into groups. If, for example, the entire sample of subjects needs to be divided into only two subgroups, then the accuracy of the technique need only be sufficient for this division, no more. If it is necessary to divide the subjects into five subgroups, then it is enough to use a technique that has a five-point measurement scale (for example: “yes”, “more likely yes than no”, “neither yes nor no”, “more likely no than yes”, “no”).

The unambiguity of a technique is characterized by the extent to which the data obtained with its help reflect changes in precisely and only the property that the technique is used to evaluate. If, along with this property, the resulting indicators also reflect others that are unrelated to the technique and go beyond the limits of its validity, then the technique does not meet the criterion of unambiguity, although it may remain partially valid.

For example, if an experimenter is interested in assessments of the motives of a person’s behavior and, in order to obtain them, he asks the subject direct questions regarding the motives of his behavior, then the answers to these questions are unlikely to meet the criterion of unambiguity. They will almost certainly reflect the degree to which the subject is aware of the motives of his behavior, his desire to appear in a favorable light in the eyes of the experimenter, and his assessment of the possible consequences of the diagnostic experiment.

Representativeness means that the properties of a wider set of objects are represented in the properties of a subset. In diagnostics they talk about the “representativeness of test norms” or the “thematic representativeness” of diagnostic tasks in relation to the “area of validity” of the methodology.

Representativeness of test norms is the correspondence of the boundary points on the distribution of test scores obtained on the standardization sample to similar boundary points that could be obtained on the testing population - on the set of subjects for whom the technique is intended. Usually, when obtaining a normal distribution curve (see Section 9.2), it is concluded that the test norms are representative. However, normality is not a necessary condition for representativeness. Representativeness of test norms can be achieved in the absence of a normal distribution.

Thematic representativeness is a measure of the representation in a set of diagnostic tasks of the subject area to which the methodology is aimed, i.e. the area of behavior in the case of testing psychological properties or the area of knowledge in the case of pedagogical diagnostics.

The diagnostic value of a method is determined by conducting a preliminary experiment with a so-called neutral group, the results of which are not used in further diagnostic work.

For example, when processing the results of a preliminary test, all the data are arranged in ascending order and the median is determined, i.e. the value located in the middle of the series. Students who score below the median are considered “weak”; those who score above it, “strong”.
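The median split described above can be sketched in a few lines. This is an illustration under stated assumptions: the function name `median_split`, the student labels, and the scores are hypothetical, and because the text does not say how to classify a score exactly equal to the median, such scores are reported separately here.

```python
from statistics import median

def median_split(scores):
    """Split subjects into "weak" and "strong" groups around the median score.

    scores: dict mapping a subject label to a preliminary test score.
    Returns (median value, weak labels, strong labels, labels at the median).
    """
    cut = median(scores.values())
    weak = sorted(name for name, s in scores.items() if s < cut)
    strong = sorted(name for name, s in scores.items() if s > cut)
    # Assumption: scores equal to the median are left unclassified
    at_median = sorted(name for name, s in scores.items() if s == cut)
    return cut, weak, strong, at_median

# Hypothetical preliminary test results for a neutral group
results = {"A": 12, "B": 18, "C": 15, "D": 9, "E": 21}
cut, weak, strong, at_median = median_split(results)
print(f"median = {cut}; weak = {weak}; strong = {strong}; at median = {at_median}")
```

With an even number of subjects, `statistics.median` returns the mean of the two middle values, so no subject necessarily sits exactly at the cut point.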

In addition to the basic ones, there are a number of additional requirements for the selection of diagnostic techniques.

First of all, the chosen method should be the simplest of all possible and the least labor-intensive of those that allow you to obtain the required result. In this regard, a simple survey technique may be preferable to a complex test.

Secondly, the chosen method should not be labor-intensive and should require a minimum of physical and mental effort to carry out the diagnosis.

Thirdly, the instructions for the method should be simple, short and understandable not only for the diagnostician, but also for the subject, setting up the subject for conscientious, trusting work, excluding the emergence of side motives that could negatively affect the results and make them doubtful. For example, it should not contain words that set the subject up for certain answers or hint at a particular assessment of these answers.

Fourthly, the environment and other conditions for conducting diagnostics should not contain extraneous stimuli that can distract the subject’s attention, change his attitude towards psychodiagnostics and turn it (attitude) from neutral and objective into biased and subjective. As a rule, it is not allowed for anyone other than the diagnostician and the test subject to be present during the diagnosis, music to be played, extraneous voices and other distracting noises to be heard.

Control questions

1. How do criteria and indicators differ? How many criteria and indicators are usually identified?

2. What are operationalization and verification?

3. How is the objectivity of a research method determined?

4. What is meant by the reliability of a research method? What methods exist for assessing the reliability of a method?

5. What is validity and by what criteria is it determined?

6. What types of validity are distinguished in the methodology of psychological and pedagogical research?

7. What additional requirements exist for methods of psychological and pedagogical research and their choice?

Practical tasks

1. Analyze the validity of the selection and application of research methods using the dissertation abstracts.

2. Evaluate the research methods that you selected for your research using the criteria highlighted in this chapter.

CHAPTER 9

PROCESSING AND INTERPRETING SCIENTIFIC DATA

Shoot! But know, I will interpret this... D.A. Leontyev. Odnopsychia

The effectiveness of scientific research depends on the researcher’s ability not only to collect reliable data, but also to systematize and classify them, identify patterns, and present the results to the scientific community and to practicing teachers. This chapter is devoted to these issues.

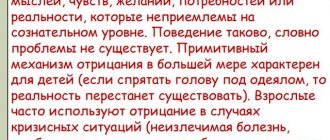

Threats

In psychology, validity is a property of a well-constructed method, but factors may arise that distort even a theoretically sound psychodiagnostic method (PDM). Side factors are more pronounced when the subject works with poorly organized stimuli or with new, previously unfamiliar tasks.

Studying unbalanced and insecure individuals is especially difficult. The main threats to high validity are the individual characteristics of the test taker and situational factors.

The reliability of the results is reduced by:

- test subject's errors;

- specialist errors;

- errors caused by conditions or incorrect diagnostics.

If the procedure does not strictly require a specialist to be in the room, his presence may distort the results of the study. Commenting on or interpreting the test tasks also reduces the reliability of the data obtained.

A subject who has an interest in making deliberate errors, or in presenting himself in a favorable light to management, distorts the diagnostic results. The psychophysiological state of the person being tested is no less dangerous: for example, the individual may be very hungry, tired, or suffering from a migraine.

Extraneous noise, voices, and the opportunity to discuss test tasks with other subjects reduce the accuracy of the results. These are errors of diagnostic conditions and procedure.

Validity and reliability of experimental methods

The reliability of the experimental methods used by the author supports the trustworthiness of the results obtained. Validity reflects the degree to which the research results correspond to the phenomenon being studied in the selected scientific field.

Any valid study is reliable by default, but reliable research is not always valid.
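This asymmetry can be written as a bound from classical test theory. The notation is an assumption, since the source uses no symbols: $r_{xy}$ is the validity coefficient (the correlation of the test score $x$ with an external criterion $y$), and $r_{xx'}$ and $r_{yy'}$ are the reliabilities of the test and of the criterion.

```latex
r_{xy} \le \sqrt{r_{xx'}\, r_{yy'}} \le \sqrt{r_{xx'}}
```

Under this bound, a test with a reliability of 0.49 cannot have a validity coefficient above 0.7, whereas a high reliability places no lower limit on validity.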

Reliability as a stable component of a reliable experiment

Reliability (reproducibility, stability of experimental results) is the ability to reproduce the research results under similar real conditions, judged by correlating the initial and final measurements.

On what does the reliability of the experiments depend?

- Minimum error of the selected instruments.

- Absence of uncontrolled variability in the chosen research methodology.

- Researcher objectivity.

The main characteristic of reliability is obtaining the same results when similar experiments are conducted multiple times. If all of these conditions are met, the research methodology can be considered reliable.
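One common way to quantify "the same results on repetition" is to correlate the scores from two administrations of the same method. The sketch below is illustrative only: the function name `pearson_r` and the score lists are hypothetical, and the source does not prescribe a specific coefficient.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary in both lists")
    return cov / sqrt(var_x * var_y)


# Hypothetical total scores of six subjects on two sessions of the same test.
first_session = [12, 15, 9, 20, 17, 11]
second_session = [13, 14, 10, 19, 18, 10]

retest_r = pearson_r(first_session, second_session)
print(f"test-retest correlation: {retest_r:.2f}")
```

A coefficient close to 1 indicates that the subjects keep roughly the same rank order across sessions; what counts as "close enough" depends on the purpose of the method and is not fixed by the source.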

Principles of interaction between validity and reliability when choosing research methods

The principles of interaction between reliability and validity are based on three main methods for establishing the reliability of the selected methodological framework.

- Repeated experiment method. The same experiment is conducted several times with the same instruments, and the final results for the object, phenomenon, or event under consideration are compared.

- Alternative form method. It can be performed in two ways: one experiment is applied to several groups of objects, or a combination of experiments is applied to one phenomenon.

- Subsampling method. For a comprehensive study, one or more objects are grouped into separate samples with similar properties and characteristics.
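In test theory, one widely used technique in the spirit of the subsampling idea is split-half reliability: the items of a single test are divided into two halves, the half-scores are correlated, and the result is adjusted with the Spearman-Brown formula. The source does not name this technique, so treating it as an instance of the subsampling method is an assumption. The function names and the response matrix below are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def split_half_reliability(item_scores):
    """Odd-even split-half reliability with the Spearman-Brown correction.

    item_scores holds one list of item scores per respondent.
    """
    # Total score on items in positions 1, 3, 5, ... and 2, 4, 6, ...
    odd_totals = [sum(row[0::2]) for row in item_scores]
    even_totals = [sum(row[1::2]) for row in item_scores]
    r_half = pearson_r(odd_totals, even_totals)
    # Spearman-Brown: estimate full-length reliability from the half-test r.
    return 2 * r_half / (1 + r_half)


# Hypothetical data: 5 respondents, 6 dichotomous items (1 = keyed answer).
responses = [
    [1, 1, 1, 1, 1, 0],
    [1, 0, 1, 0, 0, 0],
    [1, 1, 1, 1, 1, 1],
    [0, 0, 1, 0, 0, 0],
    [1, 1, 0, 1, 1, 0],
]

reliability = split_half_reliability(responses)
print(f"split-half reliability: {reliability:.2f}")
```

The odd-even split is only one possible way of forming the halves; a different split can give a different estimate, which is why the choice of split should be reported together with the coefficient.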

Specifics and objectives of a psychological experiment

An experiment aims to prove or disprove a hypothesis and is always carried out under special conditions artificially created by the researcher. The specificity of a psychological experiment lies in its partial subjectivity: psychology confronts the problem of subjectivity in both theory and practice.

All knowledge obtained in the course of psychological research is, to one degree or another, subjective, distorted by the consciousness of the subject and the experimenter. Therefore, obtaining completely reliable knowledge is an extremely difficult task. The experiment must correspond to the purpose and objectives, exclude external influences and distortion of the results by the researcher.

The task of a psychological experiment is to make the psychological phenomena, properties, and states of the subject being studied accessible to observation. To do this, the researcher constructs the conditions in which the test taker will find himself. These conditions should reveal the mental property being studied and exclude the manifestation of others.

A psychological experiment is distinguished by its dependence on the conditions and on the level of development of the phenomena being studied. It requires strict control, a constant procedure, and separation of the aspects of the psyche being studied from irrelevant ones.

The high validity of the methodology used allows us to reduce the error of the results obtained. This is an important factor in psychological research because unreliable results are of no practical use.

External criteria and validity

In order to minimize the influence of external criteria on the quality of the test or method produced, and, accordingly, on the validity indicator, the following requirements apply to them:

- they must correspond to the area in which the given research is being carried out;

- all participants must be in similar conditions and meet the specified parameters;

- the subject of research must be constant and reliable: not subject to sudden changes or fluctuations.